Model-based Planning in Deep Reinforcement Learning: A Case Study

- Tech news

- July 28, 2023

Introduction

Reinforcement learning (RL) has transformed how intelligent agents are trained to make sound decisions in complex environments. Success in RL depends on balancing exploration (trying new actions to gather information) with exploitation (choosing actions already known to pay off). Model-based reinforcement learning (MBRL) adds a model of the environment, which the agent can use to predict the consequences of its actions before taking them and so make better-informed choices. This article surveys the landscape of MBRL and its applications, then analyzes DeepMind’s trailblazing MuZero algorithm, which shows how powerful model-based planning can be.

Understanding Model-Based Reinforcement Learning

A Quick Historical Perspective

Decision-making techniques that rely on a model of the environment have a long track record. Classic search methods such as A* and tree search are well established, but they struggle in continuous environments or with large action spaces: when there are infinitely many possible actions to choose from, exhaustive search no longer scales. MBRL addresses this by recasting action selection as an optimization problem.
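
To make the scalability problem concrete, here is a minimal brute-force tree search in Python over a toy deterministic model. The model (`step`), its reward, and the action set are hypothetical choices for illustration only; the point is that enumerating every action sequence visits |A|^H leaves for |A| actions and a horizon of H steps.

```python
from itertools import product


def step(state: int, action: int) -> tuple[int, float]:
    """Hypothetical toy model: move along a line; reward is higher near position 5."""
    next_state = state + action
    return next_state, float(-abs(next_state - 5))


def exhaustive_search(state: int, actions: list[int], horizon: int):
    """Enumerate every root-to-leaf path of the search tree and keep the best one."""
    best_seq, best_return, leaves = (), float("-inf"), 0
    for seq in product(actions, repeat=horizon):  # |A| ** horizon sequences
        s, total = state, 0.0
        for a in seq:
            s, r = step(s, a)  # simulate with the model, not the real environment
            total += r
        leaves += 1
        if total > best_return:
            best_seq, best_return = seq, total
    return best_seq, best_return, leaves


print(exhaustive_search(0, [-1, 0, 1], 4))  # → ((1, 1, 1, 1), -10.0, 81)
```

With three actions and a horizon of 4 the search already visits 81 leaves; ten actions over ten steps would mean 10^10, and a continuous action set cannot be enumerated at all.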

The Challenge of Continuous Environments

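Classic planning techniques often struggle in continuous settings, where states and actions vary smoothly instead of falling into a finite set of options. A learned dynamics model helps here: agents can rely on it to simulate the outcomes of candidate actions, plan ahead, and navigate complex situations with confidence.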

Turning Action Choices into Optimization Problems

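A parametric dynamics model, one whose predictions are governed by a set of learned parameters, turns action selection into an optimization problem: the agent searches for the sequence of actions that maximizes the return predicted by the model. Solving this optimization efficiently lets the agent tackle complex problems and make better decisions through planning.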
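
One common way to solve this optimization is the cross-entropy method (CEM): sample action sequences, score them with the model, and refit the sampling distribution to the best-scoring ones. The sketch below assumes a known point-mass model as a stand-in for a learned one; the dynamics, reward, action bounds, and hyperparameters are all illustrative assumptions rather than details from the article.

```python
import numpy as np


def dynamics(state, action, dt=0.1):
    """Assumed point-mass model: state = (position, velocity), action = acceleration."""
    pos, vel = state
    return np.array([pos + vel * dt, vel + action * dt])


def predicted_return(state, actions, goal=1.0):
    """Roll the model forward; penalize distance to the goal and control effort."""
    total = 0.0
    for a in actions:
        state = dynamics(state, a)
        total += -(state[0] - goal) ** 2 - 0.01 * a ** 2
    return total


def cem_plan(state, horizon=15, pop=200, elites=20, iters=6, seed=0):
    """Cross-entropy method over open-loop action sequences."""
    rng = np.random.default_rng(seed)
    mean, std = np.zeros(horizon), np.ones(horizon)
    for _ in range(iters):
        # Sample candidate sequences from a per-step Gaussian, clipped to [-2, 2].
        samples = np.clip(rng.normal(mean, std, size=(pop, horizon)), -2.0, 2.0)
        scores = np.array([predicted_return(state, seq) for seq in samples])
        best = samples[np.argsort(scores)[-elites:]]  # highest-scoring sequences
        # Refit the sampling distribution to the elites.
        mean, std = best.mean(axis=0), best.std(axis=0) + 1e-6
    return mean


plan = cem_plan(np.array([0.0, 0.0]))
print(plan[0])  # first action to execute
```

In a model predictive control loop, the agent would execute only `plan[0]`, observe the new state, and replan from there.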

Model-Based Reinforcement Learning Review

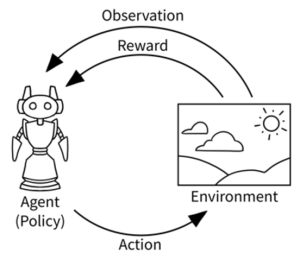

Block Diagram of MBRL

In MBRL, the agent interacts with the environment, builds an internal model of it from the experience it collects, and uses that model to guide its choices. The diagram shows this iterative cycle.

The Loop: Agent, Environment, and Learning Dynamics

The dynamics model is the foundation of effective MBRL: it describes how the environment’s state changes in response to the agent’s actions. The agent uses this model to forecast possible futures and evaluate their outcomes, which supports better planning and decision-making.
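
The loop in the diagram can be sketched in a few lines. This is a minimal illustration under stated assumptions: the hidden environment is a hypothetical noisy linear point mass, the learned model is a linear least-squares fit, and planning uses random shooting (a simpler alternative to CEM). Practical MBRL systems usually learn neural network models, but the cycle of collecting data, fitting the model, planning, and acting is the same.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical "true" environment, hidden from the agent: a noisy point mass
# with state (position, velocity) and an acceleration action.
A_TRUE = np.array([[1.0, 0.1], [0.0, 1.0]])
B_TRUE = np.array([0.0, 0.1])


def env_step(state, action):
    return A_TRUE @ state + B_TRUE * action + rng.normal(0.0, 0.01, size=2)


def fit_model(states, actions, next_states):
    """Least-squares fit of next_state ≈ [state, action] @ W, where W is (3, 2)."""
    X = np.column_stack([states, actions])
    W, *_ = np.linalg.lstsq(X, next_states, rcond=None)
    return W


def plan(W, state, horizon=10, candidates=300, goal=1.0):
    """Random shooting: roll sampled action sequences through the learned model."""
    seqs = rng.uniform(-2.0, 2.0, size=(candidates, horizon))
    s = np.tile(state, (candidates, 1))
    scores = np.zeros(candidates)
    for t in range(horizon):
        s = np.column_stack([s, seqs[:, t]]) @ W  # predicted next states
        scores -= (s[:, 0] - goal) ** 2  # penalize distance to the goal
    return seqs[np.argmax(scores), 0]  # execute only the first action


def mbrl_loop(iterations=5, steps_per_iter=20, warmup=50):
    data = []
    state = np.zeros(2)
    for _ in range(warmup):  # 1. seed the dataset with random actions
        a = rng.uniform(-2.0, 2.0)
        nxt = env_step(state, a)
        data.append((state, a, nxt))
        state = nxt
    state = np.zeros(2)  # reset the environment
    for _ in range(iterations):
        S, U, S_next = map(np.array, zip(*data))
        W = fit_model(S, U, S_next)  # 2. (re)fit the dynamics model
        for _ in range(steps_per_iter):
            a = plan(W, state)  # 3. plan with the learned model
            nxt = env_step(state, a)  # 4. act in the real environment
            data.append((state, a, nxt))  # 5. grow the dataset
            state = nxt
    return state, W


final_state, W = mbrl_loop()
```

Because the model is refit on every iteration, the data gathered while the planner acts keeps improving the model the planner relies on.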

Integrating Control and Dynamics Model Training

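A key obstacle in MBRL is connecting control (choosing actions) with learning the dynamics model. The model is typically trained to predict the environment accurately, while the controller only cares about choosing good actions; when these two objectives do not line up, the agent can perform poorly even with a model that looks accurate.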

DeepMind’s MuZero Algorithm: Learning to Plan from Scratch

Unlocking Potential through Dream-Inspired Planning

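DeepMind’s MuZero algorithm has attracted considerable attention for its ability to “dream”: it plans by imagining possible action sequences inside a learned model and assessing their likely outcomes with Monte Carlo tree search, without ever being given the rules of the environment. This lets it make good choices even in environments whose dynamics it must discover for itself.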
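
MuZero’s planning can be illustrated with a heavily simplified tree search in latent space. Following the MuZero paper’s notation, `h` maps an observation to a latent state, `g` predicts a reward and the next latent state, and `f` predicts a policy prior and a value. In this sketch they are hypothetical, untrained random linear maps, the exploration constant `c` is an assumed value, and the search omits parts of the real algorithm such as value normalization and exploration noise. It shows the idea, not DeepMind’s implementation.

```python
import math

import numpy as np

rng = np.random.default_rng(0)
OBS, LATENT, N_ACTIONS = 4, 8, 3
# Hypothetical, untrained stand-ins for MuZero's learned networks.
W_h = rng.normal(size=(OBS, LATENT))
W_g = rng.normal(size=(LATENT + N_ACTIONS, LATENT)) / math.sqrt(LATENT)
w_r = rng.normal(size=LATENT)
W_p = rng.normal(size=(LATENT, N_ACTIONS))
w_v = rng.normal(size=LATENT)


def h(obs):  # representation: observation -> latent state
    return np.tanh(obs @ W_h)


def g(latent, action):  # dynamics: (latent, action) -> (reward, next latent)
    nxt = np.tanh(np.concatenate([latent, np.eye(N_ACTIONS)[action]]) @ W_g)
    return float(nxt @ w_r), nxt


def f(latent):  # prediction: latent -> (policy prior, value)
    logits = latent @ W_p
    prior = np.exp(logits - logits.max())
    return prior / prior.sum(), float(latent @ w_v)


class Node:
    def __init__(self, prior):
        self.prior, self.visits, self.value_sum = prior, 0, 0.0
        self.reward, self.latent, self.children = 0.0, None, {}

    def value(self):
        return self.value_sum / self.visits if self.visits else 0.0


def expand(node, latent):
    """Attach a latent state and create one child per action using f's prior."""
    node.latent = latent
    prior, value = f(latent)
    node.children = {a: Node(prior[a]) for a in range(N_ACTIONS)}
    return value


def ucb(parent, child, discount, c=1.25):
    """Simplified pUCT score: value estimate plus prior-weighted exploration bonus."""
    q = (child.reward + discount * child.value()) if child.visits else 0.0
    u = c * child.prior * math.sqrt(parent.visits) / (1 + child.visits)
    return q + u


def run_mcts(obs, num_simulations=50, discount=0.997):
    root = Node(prior=1.0)
    expand(root, h(obs))
    for _ in range(num_simulations):
        node, path = root, [root]
        # Selection: follow the best-scoring child until an unexpanded node.
        while node.latent is not None:
            parent = node
            action, node = max(parent.children.items(),
                               key=lambda item: ucb(parent, item[1], discount))
            path.append(node)
        # Expansion: imagine the transition with g, then evaluate it with f.
        node.reward, latent = g(path[-2].latent, action)
        value = expand(node, latent)
        # Backup: propagate the discounted return estimate up the path.
        for n in reversed(path):
            n.value_sum += value
            n.visits += 1
            value = n.reward + discount * value
    return np.array([root.children[a].visits for a in range(N_ACTIONS)])


counts = run_mcts(np.ones(OBS))
```

The agent would then act, for example, by sampling from `counts / counts.sum()`, the normalized visit counts at the root.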

Fusing RL with Model-Based Planning

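MuZero learns a model that predicts only the quantities that matter for planning (rewards, values, and action probabilities) instead of reconstructing every detail of the environment. This lets a single algorithm handle demanding domains, including Go, chess, shogi, and Atari games, making it a significant milestone in RL.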
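
The sketch below illustrates what predicting only what planning needs means in practice: the model is unrolled along a few actions, and each step yields a reward, a policy, and a value, but never a reconstructed observation. Training compares these predictions with targets (observed rewards, search policies, and value targets). The networks are hypothetical, untrained random stand-ins, the targets in the usage lines are made-up placeholders, and the loss (squared error for rewards and values, cross-entropy for the policy) is a simplification of the losses described in the MuZero paper.

```python
import numpy as np

rng = np.random.default_rng(1)
OBS, LATENT, N_ACTIONS = 4, 8, 3
# Hypothetical, untrained parameters standing in for MuZero's networks.
W_h = rng.normal(size=(OBS, LATENT))
W_g = rng.normal(size=(LATENT + N_ACTIONS, LATENT)) / np.sqrt(LATENT)
w_r = rng.normal(size=LATENT)
W_p = rng.normal(size=(LATENT, N_ACTIONS))
w_v = rng.normal(size=LATENT)


def h(obs):  # representation
    return np.tanh(obs @ W_h)


def g(latent, action):  # dynamics: predicts a reward, not an observation
    nxt = np.tanh(np.concatenate([latent, np.eye(N_ACTIONS)[action]]) @ W_g)
    return float(nxt @ w_r), nxt


def f(latent):  # prediction: policy and value
    logits = latent @ W_p
    p = np.exp(logits - logits.max())
    return p / p.sum(), float(latent @ w_v)


def unroll(obs, actions):
    """Predict (reward, policy, value) at each step, entirely in latent space."""
    latent = h(obs)
    policy, value = f(latent)
    preds = [(0.0, policy, value)]  # no reward is predicted for the root
    for a in actions:
        reward, latent = g(latent, a)
        policy, value = f(latent)
        preds.append((reward, policy, value))
    return preds


def muzero_style_loss(preds, target_rewards, target_policies, target_values):
    loss = 0.0
    for k, (r, p, v) in enumerate(preds):
        if k > 0:
            loss += (r - target_rewards[k - 1]) ** 2  # reward error
        loss += (v - target_values[k]) ** 2  # value error
        loss -= float(np.sum(target_policies[k] * np.log(p + 1e-12)))  # policy cross-entropy
    return loss


preds = unroll(np.ones(OBS), actions=[0, 2, 1])
# Made-up placeholder targets, only to show the shapes involved.
loss = muzero_style_loss(preds, target_rewards=[0.0, 1.0, 0.0],
                         target_policies=[np.full(N_ACTIONS, 1 / N_ACTIONS)] * 4,
                         target_values=[0.0] * 4)
```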

Advances in Reinforcement Learning through MuZero

MuZero’s results have pushed the frontier of what model-based RL can achieve. Problems with sparse rewards and continuous action spaces are natural targets for this approach; the original algorithm assumes discrete actions, and later variants such as Sampled MuZero extend it to continuous ones.

Next-Generation Model-Based Planning in RL

MuZero’s accomplishments point to a promising future for model-based planning in RL. As hardware and techniques improve, new algorithms are likely to take on progressively more challenging problems.

Conclusion

Model-based planning represents substantial progress in Deep Reinforcement Learning. By incorporating simulations of the environment into the decision-making process, MBRL enables agents to plan more effectively. MuZero’s achievements illustrate how capable model-based planning can be at tackling challenging RL problems, and future developments will likely expand what intelligent agents and autonomous decision-making systems can do.